AI is now embedded in almost every part of a modern organisation. Product teams ship AI features, marketing experiments with generative tools, vendors quietly add “smart” capabilities, and engineers plug models into internal workflows.

For privacy and AI governance leaders, this is not just a technology trend; it is an operational problem. They are expected to:

- Keep an accurate register of all AI systems, including shadow AI

- Classify systems under frameworks like the EU AI Act risk scheme, from Unacceptable to Minimal Risk

- Run AI impact or risk assessments, often on top of DPIAs, TIAs and LIAs

- Align AI use with internal policies, legal advice, and security controls

- Produce documentation and evidence for regulators, customers, and auditors

Trying to do this with spreadsheets, ad hoc forms, and scattered policy documents quickly breaks down. This is where AI governance platforms come in. They help you see where AI is used, how risky each system is, which controls are in place, and what needs attention next.

Below are five AI governance platforms worth considering as you prepare for 2026 and the practical impact of the AI Act and other emerging regulations.

Top AI Governance Platforms

Here are some of the most reliable platforms for AI governance for 2025 and 2026 alike.

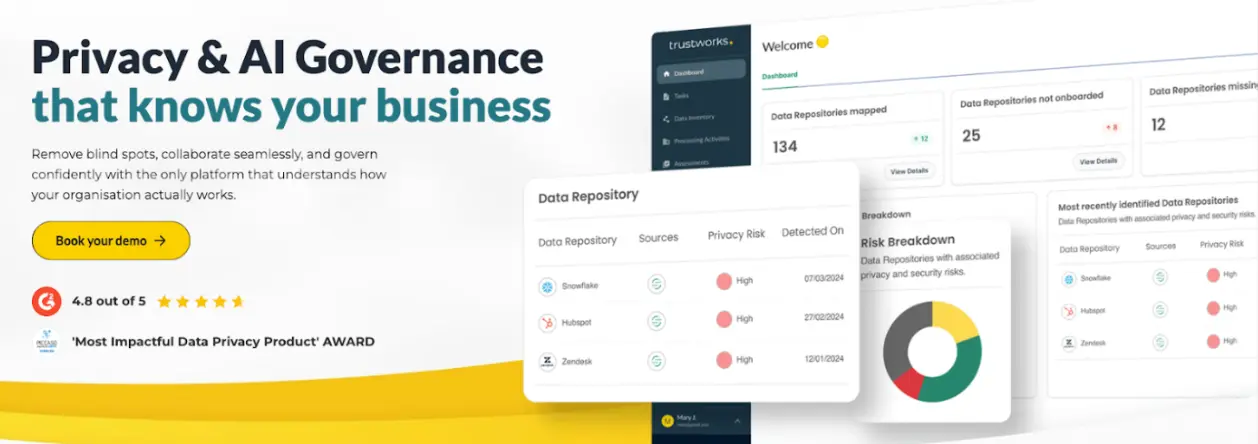

TrustWorks

The TrustWorks AI Governance platform is an award-winning solution that helps organisations comply with the EU AI Act and the coming wave of AI regulations. It combines no-code, easy-to-implement automations with AI, and promotes cross-functional collaboration within the organisation.

It sits on the same foundation as the TrustWorks privacy modules, so AI governance is not a standalone tool; it is connected to your RoPA, data map, vendor ecosystem, and assessment workflows.

This makes it especially relevant for privacy, legal, and risk teams that now own AI governance but already manage GDPR and other privacy obligations.

Key AI governance features

- AI use case discovery and register

Uses integrations, vendor analysis, and source code connections to detect AI capabilities in third-party tools, internal code bases, and ongoing projects. These are pulled into a central AI systems register so you can see everything that is in play, including shadow AI.

- EU AI Act risk classification

Classifies AI systems according to the EU AI Act risk framework, suggests categories such as Unacceptable, High, Limited, or Minimal Risk, and highlights key risk factors and mitigation measures.

- AI risk management and assessments

Provides predefined controls mapped to AI Act requirements, lets you assign mitigation measures, and manage conformity assessment workflows from the same platform you use for DPIAs, TIAs, and other privacy assessments.

- Audit log and reporting

Gives you an audit trail of decisions, approvals, and control implementation, with dashboards and exportable reports that support regulatory submissions and internal oversight.

- Tight link with privacy operations

Because AI governance sits alongside RoPA, data mapping, DSR automation, and vendor risk, you avoid duplicating records. One change in a system, vendor, or processing activity is reflected across both privacy and AI views.
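To make the idea of a central AI systems register with risk tiers more concrete, here is a minimal Python sketch. It is purely illustrative and does not reflect how TrustWorks or any other vendor models its data: the class names, fields, and example systems are all assumptions, and the tier assigned to each example is a placeholder, not a legal classification.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Optional


class RiskTier(Enum):
    """The four risk levels named in the article for the EU AI Act scheme."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


@dataclass
class AISystem:
    """One register entry (hypothetical fields, for illustration only)."""
    name: str
    owner: str
    vendor: Optional[str] = None          # None for internally built systems
    risk_tier: Optional[RiskTier] = None  # None until someone classifies it
    processing_activities: List[str] = field(default_factory=list)  # RoPA links


class AIRegister:
    """A tiny in-memory register keyed by system name."""

    def __init__(self) -> None:
        self._systems: Dict[str, AISystem] = {}

    def add(self, system: AISystem) -> None:
        self._systems[system.name] = system

    def unclassified(self) -> List[AISystem]:
        """Systems that were discovered but not yet risk-classified."""
        return [s for s in self._systems.values() if s.risk_tier is None]

    def by_tier(self, tier: RiskTier) -> List[AISystem]:
        return [s for s in self._systems.values() if s.risk_tier is tier]


register = AIRegister()
register.add(AISystem(name="cv-screening", owner="HR", risk_tier=RiskTier.HIGH))
register.add(AISystem(name="chat-widget", owner="Support", vendor="ExampleVendor"))

# Newly discovered systems show up as work still to be done.
todo = [s.name for s in register.unclassified()]
```

Keeping the tier optional is a deliberate choice in this sketch: a system found through discovery can enter the register immediately, and the gap in classification stays visible until someone closes it.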

Additional benefits for privacy and legal teams

- Built and hosted in the EU, with personal data staying within the EEA, which simplifies data transfer questions for legal and compliance teams.

- EU-based support and a community that spans multiple jurisdictions, so teams get help that understands both European regulation and global operations.

Why it stands out

TrustWorks acts as an AI governance and privacy automation platform, not just a layer on top of models. It brings AI system discovery, risk classification, and lifecycle governance into the same operational environment that privacy teams already use to manage RoPA, DSRs, assessments, and vendor risk.

It is also one of the first serious alternatives to legacy privacy platforms that have dominated the market for years. According to TrustWorks, around 70% of its customers have migrated from an older legacy solution, which suggests it fits organisations that have hit the limits of their current tools and need a more modern, flexible approach.

TrustWorks is a strong choice for scale-ups and enterprises that want to treat AI governance as an extension of privacy and risk operations, especially in complex, multilingual, and multi-jurisdictional environments where manual tracking is no longer realistic.

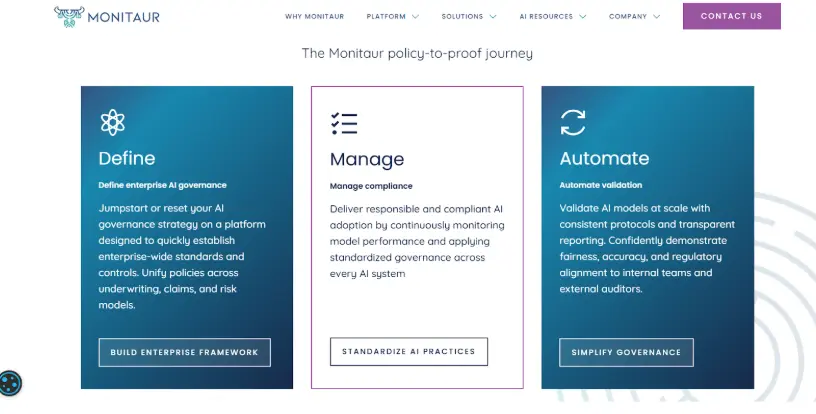

Monitaur

Monitaur focuses on model governance, helping organisations move from high-level AI policies to day-to-day practice. It offers a roadmap that ties governance frameworks to concrete controls, model validation, and monitoring.

This is well-suited to teams that already have model pipelines in production and need an auditable system of record around how those models are governed.

Key features

- Governance framework translation into actionable controls and workflows, creating a single place to capture policies, responsibilities, and evidence.

- Pre-deployment validation, with automated checks and scorecards that show whether a model meets performance, fairness, and compliance thresholds before going live.

- Continuous monitoring for drift, bias, and performance issues, with alerts and dashboards that reduce manual oversight effort.

- Controls library, which lets teams define control requirements once and apply them across many models and use cases.
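The combination of a reusable controls library and a pre-deployment scorecard can be sketched in a few lines of Python. This is a hedged illustration of the general pattern, not Monitaur's API: the `Control` class, the metric names, and the threshold values are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Control:
    """A reusable requirement: one metric compared against one threshold."""
    metric: str
    threshold: float
    higher_is_better: bool = True


def evaluate(metrics: Dict[str, float], controls: List[Control]) -> Dict[str, bool]:
    """Build a scorecard of metric name -> pass/fail.

    A metric that was never measured counts as a failure, so missing
    evidence cannot slip through as an approval.
    """
    scorecard: Dict[str, bool] = {}
    for control in controls:
        value = metrics.get(control.metric)
        if value is None:
            scorecard[control.metric] = False
        elif control.higher_is_better:
            scorecard[control.metric] = value >= control.threshold
        else:
            scorecard[control.metric] = value <= control.threshold
    return scorecard


def approved(scorecard: Dict[str, bool]) -> bool:
    """Approve only if at least one control was checked and all passed."""
    return bool(scorecard) and all(scorecard.values())


# Defined once, applied to any model (values are illustrative only).
CONTROLS = [
    Control("accuracy", 0.90),
    Control("disparate_impact_ratio", 0.80),
    Control("latency_ms", 200, higher_is_better=False),
]

candidate = {"accuracy": 0.93, "disparate_impact_ratio": 0.75, "latency_ms": 120}
scorecard = evaluate(candidate, CONTROLS)
go_live = approved(scorecard)
```

In this example the candidate model passes on accuracy and latency but fails the fairness control, so `go_live` is `False` and the scorecard records exactly which requirement blocked the release.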

Why it stands out

Monitaur is a good fit if your main concern is model governance and auditability. It gives risk, compliance, and data science teams a common framework, so they can prove how models were approved, monitored, and adjusted over time, without having to piece that story together from different tools.

Fiddler AI

Fiddler AI is an AI observability and security platform that supports responsible AI across traditional ML models, generative AI, and more complex agentic systems.

It focuses on real-time visibility, explainability, and safety guardrails, and is typically used by teams that already have significant AI in production and need deep insight into how those systems behave.

Key features

- Unified AI observability, monitoring data drift, performance degradation, and anomalies across models running in cloud or on-prem environments.

- Explainability tools using methods such as SHAP and Integrated Gradients, to show which features influence particular decisions and support root cause analysis.

- Bias and fairness checks, with metrics and visualisations that help detect and understand disparate impact across different user groups.

- LLM and GenAI safety, including guardrails that scan prompts and responses for toxicity, PII leaks, hallucinations, and other unwanted behaviour.
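As a rough illustration of what a data drift check involves, the sketch below computes the Population Stability Index (PSI), one commonly used drift measure, in plain Python. It is an assumption chosen for illustration and says nothing about which metrics Fiddler AI actually uses; the function name, bin count, and sample data are hypothetical.

```python
import math
from typing import List, Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a baseline sample and a current sample of one feature.

    Bins are equal-width over the baseline range; current values outside
    that range are clamped into the first or last bin. Both inputs must
    be non-empty.
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps keeps empty bins from causing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    base_p = proportions(baseline)
    curr_p = proportions(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base_p, curr_p))


training = [i / 100 for i in range(100)]          # roughly uniform on [0, 1)
production = [0.5 + i / 200 for i in range(100)]  # shifted to [0.5, 1)

no_drift = population_stability_index(training, training)
drift = population_stability_index(training, production)
```

Identical samples give a PSI of zero, and the score grows as the distributions diverge. A frequently quoted rule of thumb treats values above roughly 0.2 as a significant shift, but the right alert threshold depends on the feature and the use case.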

Why it stands out

Fiddler AI is especially relevant for organisations where AI is already business critical and where model risk must be monitored continuously. It gives data science, MLOps, and risk teams a shared platform for visibility and analysis, and can complement broader AI governance platforms by providing deeper technical observability.

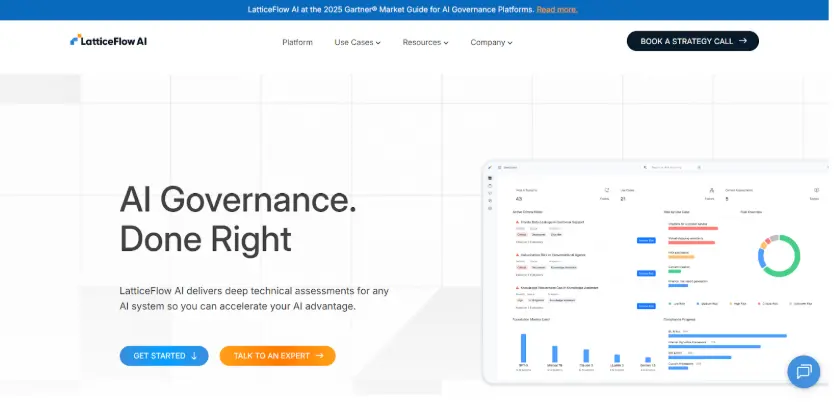

LatticeFlow

LatticeFlow is an AI governance and risk management platform designed first and foremost for AI developers and ML engineers who need to prove that their models are safe, robust, and compliant. It goes beyond checklists and policies, running deep technical evaluations on AI systems and turning the results into evidence that risk, compliance, and audit teams can actually rely on.

It is particularly useful in organisations where models are already in production, and developers are under pressure to demonstrate alignment with frameworks like the EU AI Act without slowing down delivery.

Key features

- Evidence-based technical assessments that run rigorous, automated checks on models to uncover safety, robustness, bias and cybersecurity issues, rather than relying on manual spot checks.

- LLM Checker for EU AI Act compliance, which evaluates large language models across dozens of benchmarks, including toxicity, bias and security, and highlights where they fall short of regulatory expectations.

- Developer-centric workflows to help AI engineers diagnose and fix data and model issues, building on LatticeFlow’s origins as an ETH Zurich spin-off focused on robust and trustworthy AI.

- AI governance engine integrations, allowing LatticeFlow to plug into existing GRC and trust platforms so that technical assessments feed directly into broader risk and compliance processes.

Why it stands out

LatticeFlow stands out by meeting AI developers where they are. Instead of asking teams to write more documents, it gives them tools to systematically test models, surface concrete risks, and generate regulator-grade evidence that compliance, risk, and audit teams can use.

If your biggest challenge is technically validating models for safety and EU AI Act readiness, and giving developers a structured way to check and document risks, LatticeFlow is a strong option to have on your AI governance shortlist.

Holistic AI

Holistic AI provides an AI governance platform with a strong focus on EU AI Act readiness. It offers tools that help organisations classify systems under the Act’s risk scheme and align controls with the specific obligations that follow from each category.

Key features

- AI Act risk calculators and assessments that help identify whether systems fall under Unacceptable, High, or other risk levels, and what obligations apply.

- Governance workflows to operationalise AI risk management and documentation in line with EU AI Act requirements.

- Regulatory updates and guidance, supporting teams as the AI Act and related guidance evolve.

Why it stands out

Holistic AI is relevant for organisations that have a strong EU presence and want a focused way to align AI use with the AI Act, without building everything from scratch internally.

Summing Up

Choosing an AI governance platform should start with the work you actually need to do. Ask yourself:

- Do you mainly need to discover and classify AI systems for the AI Act?

- Is your biggest risk the behaviour of complex models already in production?

- Do you need a standards-driven management system around policies and controls?

- Or do you want AI governance integrated into your existing privacy operations?

Among the platforms mentioned here, TrustWorks stands out because its AI governance module is built for compliance with the EU AI Act and other AI regulations, with a focus on context-aware governance. If your organisation is already investing in modernising privacy operations and needs to get ready for the EU AI Act at the same time, TrustWorks is a logical starting point for your AI governance shortlist.